spark select | what is selectExpr in PySpark

Learn how to use the select() function in Spark SQL to select one or multiple columns, nested columns, a column by index, all columns, columns from a list, columns matching a regular expression, or a column by name.

0 · what is selectexpr in pyspark

1 · spark sql select from

2 · spark select from dataframe

3 · spark select columns from list

4 · spark select column from dataframe

5 · spark select alias

6 · pyspark select columns from list

7 · pyspark select columns by index

pyspark.sql.DataFrame.select: DataFrame.select(*cols: ColumnOrName) → DataFrame. Projects a set of expressions and returns a new DataFrame.

Learn how to use the SELECT statement to retrieve result sets from one or more tables in Spark SQL. See the syntax, parameters, clauses, and examples.

Learn the differences between the Spark select() and selectExpr() functions, and how to use each to select columns from a DataFrame or Dataset. See examples of untyped ...

In PySpark, the select() function is used to select a single column, multiple columns, a column by index, all columns from a list, and nested columns from a DataFrame.

SELECT - Spark 3.5.1 Documentation. WHERE clause. Description: The WHERE clause is used to limit the results of the FROM clause of a query or a subquery based on the ...

What's the difference between selecting with a where clause and filtering in Spark? Are there any use cases in which one is more appropriate than the other? When do I use DataFrame newdf = ...

In today's article we discussed the difference between the select() and selectExpr() methods in Spark. We showcased how both can be used to perform ...
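On the where-versus-filter question: in the PySpark DataFrame API, where() is documented as an alias for filter(), so the two helpers in this sketch should return the same rows. The sample data, column names, and helper names are assumptions made for illustration; they do not come from the quoted question.

```python
def rows_with_filter(df, condition):
    """Keep rows matching a SQL condition string, using filter()."""
    return df.filter(condition)


def rows_with_where(df, condition):
    """Same operation through where(), documented as an alias of filter()."""
    return df.where(condition)


def demo():
    # Imported lazily; running demo() requires a local PySpark installation.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.master("local[1]").appName("where-vs-filter").getOrCreate()
    # Hypothetical sample data.
    df = spark.createDataFrame([("Ann", 34), ("Bob", 17)], ["name", "age"])
    via_filter = rows_with_filter(df, "age >= 18").collect()
    via_where = rows_with_where(df, "age >= 18").collect()
    print(via_filter == via_where)  # compares the rows returned by each call
    spark.stop()
```

Calling demo() starts a local session; which spelling to use is largely a matter of taste, with where() reading closer to SQL.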

This post shows how to grab a subset of the DataFrame columns with select. It also shows how to add a column with withColumn and how to add multiple columns .

In Spark SQL, the select() function is the most popular one; it is used to select one or multiple columns, nested columns, a column by index, all columns, columns from a list, or columns matching a regular expression from a DataFrame. select() is a transformation function in Spark and returns a new DataFrame with the selected columns.

Arguments: a SparkDataFrame. col: a list of columns, a single Column, or a name. ...: additional column(s) if only one column is specified in col; if more than one column is assigned in col, ... should be left empty. The name of a Column (without being wrapped by ""). A Column or an atomic vector of length 1 as a literal value, or NULL.

pyspark.sql.DataFrame.selectExpr: Projects a set of SQL expressions and returns a new DataFrame. This is a variant of select() that accepts SQL expressions. New in version 1.3.0. Changed in version 3.4.0: Supports Spark Connect. Returns: a DataFrame with new/old columns transformed by expressions.
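A minimal sketch of selectExpr() as described above, next to the equivalent select() call that wraps each string in expr(). The DataFrame contents, the is_greater alias, and the flag_expressions helper are hypothetical names chosen for this example.

```python
def flag_expressions(column, threshold):
    """Build SQL expression strings: the column itself plus a boolean flag column."""
    return [column, f"{column} > {threshold} AS is_greater"]


def demo():
    # Imported lazily; running demo() requires a local PySpark installation.
    from pyspark.sql import SparkSession
    from pyspark.sql.functions import expr

    spark = SparkSession.builder.master("local[1]").appName("selectexpr-demo").getOrCreate()
    # Hypothetical sample data with a numeric column named "count".
    df = spark.createDataFrame([(5,), (25,)], ["count"])
    exprs = flag_expressions("count", 10)
    df.selectExpr(*exprs).show()                  # SQL strings passed directly
    df.select(*[expr(e) for e in exprs]).show()   # same projection via select() + expr()
    spark.stop()
```

Building the expression strings in a separate function keeps them easy to inspect before they are handed to Spark.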

pyspark.sql.DataFrame.select parameters: column names (string) or expressions (Column). If one of the column names is '*', that column is expanded to include all columns in the current DataFrame.
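The selection styles mentioned on this page (by name, with '*', from a list, by index) can be sketched roughly as follows. The helper names and the three-column sample DataFrame are assumptions for illustration only.

```python
def select_from_list(df, names):
    """Select the columns named in a Python list."""
    return df.select(*names)


def select_by_index(df, indices):
    """Select columns by position, using the order of df.columns."""
    return df.select(*[df.columns[i] for i in indices])


def demo():
    # Imported lazily; running demo() requires a local PySpark installation.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.master("local[1]").appName("select-demo").getOrCreate()
    # Hypothetical sample data.
    df = spark.createDataFrame([(1, "Ann", 3.5)], ["id", "name", "score"])
    df.select("id", "name").show()                  # by column name
    df.select("*").show()                           # '*' expands to all columns
    select_from_list(df, ["name", "score"]).show()  # from a list
    select_by_index(df, [0, 2]).show()              # first and third columns
    spark.stop()
```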

ALL: Select all matching rows from the table references. Enabled by default.
DISTINCT: Select all matching rows from the table references after removing duplicates in results.
named_expression: An expression with an optional assigned name.
expression: A combination of one or more values, operators, and SQL functions that evaluates to a ...

select(): pyspark.sql.DataFrame.select() is a transformation function that returns a new DataFrame with the desired columns as specified in the inputs. It accepts one or more columns, each given as a str or a Column, or a single list of them when you want to select multiple columns. The method projects a set of expressions and returns a new Spark ...

PySpark selectExpr() is a DataFrame function similar to select(); the difference is that it takes a set of SQL expressions as strings. This makes it possible to run SQL-like expressions without creating a temporary table or view. selectExpr() has a single signature that takes SQL expressions as strings and returns a ...

Spark SQL lets you query structured data inside Spark programs, using either SQL or a familiar DataFrame API. Usable in Java, Scala, Python and R.

    results = spark.sql("SELECT * FROM people")
    names = results.rdd.map(lambda p: p.name)

Apply functions to results of SQL queries.

The selectExpr function in Spark lets you perform operations on your DataFrame using SQL-like expressions. This means you can apply the SQL expressions and functions you are comfortable with, including aggregate functions, conditional expressions, and more complex column transformations.

Spark SQL is a Spark module for structured data processing. Unlike the basic Spark RDD API, the interfaces provided by Spark SQL provide Spark with more information about the structure of both the data and the computation being performed. Internally, Spark SQL uses this extra information to perform extra optimizations.

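PySpark: What does the select() method do? In this article, we introduce the purpose and usage of the .select() method in PySpark. PySpark is the Python API for Apache Spark, used for big data processing and analysis. The .select() method is one of the common ways to select specific columns or transform existing columns in a DataFrame. Basic usage of the .select() method: the .select() method is used ...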

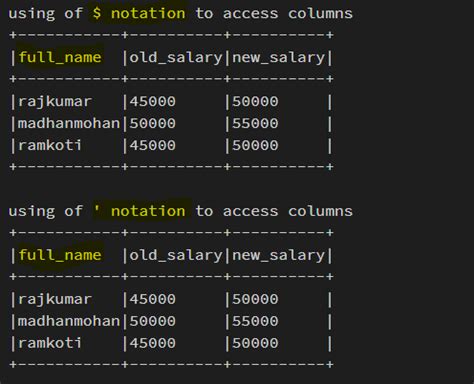

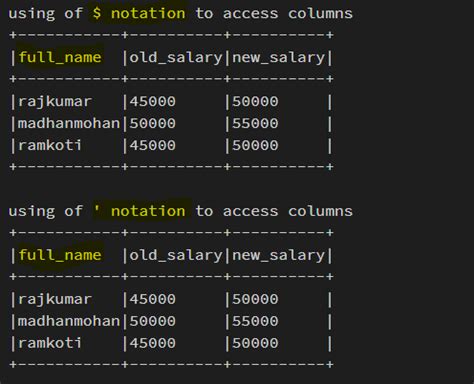

In this blog post, we will explore different ways to select columns in PySpark DataFrames, accompanied by example code for better understanding.

1. Selecting Columns using column names. The select function is the most straightforward way to select columns from a DataFrame. You can specify the columns by their names as arguments or by using the ...

selectExpr: a shortcut for select and expr. select and expr are widely used when working with Spark DataFrames, and the Spark team has made them easy to use together: you can use the selectExpr function. Python: df_csv.selectExpr("count", "count > 10 as if_greater ...

Spark supports a SELECT statement that is used to retrieve rows from one or more tables according to the specified clauses. The full syntax and a brief description of the supported clauses are explained in the SELECT section. The SQL statements related to SELECT are also included in this section.

The select method can be used to grab a subset of columns, rename columns, or append columns. It’s a powerful method that has a variety of applications. withColumn is useful for adding a single column. It shouldn’t be chained when adding multiple columns (fine to chain a few times, but shouldn’t be chained hundreds of times).
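As a rough illustration of appending several columns in one projection instead of a long withColumn chain, the sketch below builds the expressions up front and passes them to a single selectExpr("*", ...) call. The price column, the multiplier list, and both helper names are hypothetical.

```python
def derived_column_exprs(source, factors):
    """Build one SQL expression per derived column, e.g. 'price * 2 AS price_x2'."""
    return [f"{source} * {f} AS {source}_x{f}" for f in factors]


def add_columns_in_one_projection(df, exprs):
    """Keep all existing columns ('*') and append the derived ones in a single projection."""
    return df.selectExpr("*", *exprs)


def demo():
    # Imported lazily; running demo() requires a local PySpark installation.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.master("local[1]").appName("multi-column-demo").getOrCreate()
    # Hypothetical sample data.
    df = spark.createDataFrame([(10.0,), (20.0,)], ["price"])
    exprs = derived_column_exprs("price", [2, 3, 4])
    add_columns_in_one_projection(df, exprs).show()
    spark.stop()
```

The same list of expressions could be generated for hundreds of columns without growing the query plan one withColumn step at a time.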

Spark SQL Explained with Examples. Naveen Nelamali. April 24, 2024.

Actions vs Transformations.

collect (action): Returns all the elements of the dataset as an array at the driver program. This is usually useful after a filter or other operation that returns a sufficiently small subset of the data.

select(*cols) (transformation): Projects a set of expressions and returns a new DataFrame.
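The split between a transformation and an action can be sketched as below: select() only describes a new DataFrame, while collect() runs the job and brings rows back to the driver. The name column and the helper names are assumptions for this example.

```python
def project_names(df):
    """Transformation: returns a new DataFrame lazily; no data is moved here."""
    return df.select("name")


def names_on_driver(df):
    """Action: collect() executes the plan and returns the rows to the driver."""
    return [row["name"] for row in project_names(df).collect()]


def demo():
    # Imported lazily; running demo() requires a local PySpark installation.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.master("local[1]").appName("action-demo").getOrCreate()
    # Hypothetical sample data, small enough to collect safely.
    df = spark.createDataFrame([("Ann", 34), ("Bob", 17)], ["name", "age"])
    print(names_on_driver(df))
    spark.stop()
```

As the quoted text notes, collect() is best reserved for results already reduced to a small subset.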

The elt function returns NULL if the index exceeds the length of the array and spark.sql.ansi.enabled is set to false. If spark.sql.ansi.enabled is set to true, it throws ArrayIndexOutOfBoundsException for invalid indices. Examples:

    > SELECT elt(1, 'scala', 'java');
     scala
    > SELECT elt(2, 'a', 1);
     1

Using Spark SQL in Spark Applications. The SparkSession, introduced in Spark 2.0, provides a unified entry point for programming Spark with the Structured APIs. You can use a SparkSession to access Spark functionality: just import the class and create an instance in your code. To issue any SQL query, use the sql() method on the SparkSession ...
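A small sketch of issuing SQL through a SparkSession: the DataFrame is first registered as a temporary view so that the query can refer to it by name. The people view, its columns, and the query_as_view helper are hypothetical; the elt call mirrors the documentation example quoted above.

```python
def query_as_view(spark, df, view_name, query):
    """Register df as a temporary view, then run a SQL query with spark.sql()."""
    df.createOrReplaceTempView(view_name)
    return spark.sql(query)


def demo():
    # Imported lazily; running demo() requires a local PySpark installation.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.master("local[1]").appName("sql-demo").getOrCreate()
    # Hypothetical sample data.
    df = spark.createDataFrame([("Ann", 34), ("Bob", 17)], ["name", "age"])
    query_as_view(spark, df, "people", "SELECT name FROM people WHERE age >= 18").show()
    # A built-in SQL function can be called without any table at all.
    spark.sql("SELECT elt(1, 'scala', 'java') AS picked").show()
    spark.stop()
```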

Dynamically select the columns in a Spark dataframe. Pyspark dynamic column selection from dataframe.

The dataset in ss.csv contains some columns I am interested in:

    ss_ = spark.read.csv("ss.csv", header=True, inferSchema=True)
    ss_.columns
    ['Reporting Area', 'MMWR Year', 'MMWR Week', 'Salmonellosis (excluding Paratyphoid fever andTyphoid fever)†, Current week', 'Salmonellosis (excluding Paratyphoid fever andTyphoid fever)†, ...
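One possible way to select columns dynamically when their names contain spaces, commas, or parentheses is to filter df.columns in plain Python and quote each name with backticks before passing it to selectExpr(). This is a sketch under assumptions: the keyword matching rule and both helper names are invented here, and the sample column names only imitate the style of the ones listed above.

```python
def quote_identifier(name):
    """Wrap a column name in backticks, doubling any backtick inside it."""
    return "`" + name.replace("`", "``") + "`"


def select_matching(df, keyword):
    """Select every column whose name contains keyword (case-insensitive)."""
    wanted = [c for c in df.columns if keyword.lower() in c.lower()]
    return df.selectExpr(*[quote_identifier(c) for c in wanted])


def demo():
    # Imported lazily; running demo() requires a local PySpark installation.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.master("local[1]").appName("dynamic-select").getOrCreate()
    # Hypothetical data with awkward column names.
    df = spark.createDataFrame(
        [("Maine", 2014, 3)],
        ["Reporting Area", "MMWR Year", "Cases, Current week"],
    )
    select_matching(df, "mmwr").show()
    select_matching(df, "current week").show()
    spark.stop()
```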
